Scraping Ctrip Train Ticket Information with a Python Crawler and Saving It

2021-01-12 20:02:12 · 1611 views


Today's topic: using a Python crawler to scrape train ticket information from Ctrip and save it.

Goal: scrape the Ctrip website

One-way and transfer trips in the Trains section

One-way

url="https://trains.ctrip.com/trainbooking/search?tocn=%25e5%258d%2583%25e5%25b2%259b%25e6%25b9%2596&fromcn=%25e6%259d%25ad%25e5%25b7%259e&day=2020-12-31"

Transfer

url="https://trains.ctrip.com/pages/booking/hubSingleTrip?ticketType=2&fromCn=%25E6%259D%25AD%25E5%25B7%259E&toCn=%25E5%258D%2583%25E5%25B2%259B%25E6%25B9%2596&departDate=2020-12-31"

Use parse.quote() to URL-encode the query parameters

Use csv to save the data

Use random.choice to pick a User-Agent; I think this is a good habit

Ctrip's one-way results are in the HTML source of the page itself

Ctrip's transfer results are stored in JSON served from a separate URL (js_url)
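The repeated percent-encoding is easiest to see on a single station name. Below is a minimal sketch, assuming only the standard library; `encode_station` is a hypothetical helper introduced for illustration, not part of the script. It shows how applying parse.quote() two or three times produces the `%25...` and `%2525...` forms that appear in the URLs.

```python
from urllib import parse


def encode_station(name: str, times: int) -> str:
    """Percent-encode a station name `times` times in a row (hypothetical helper)."""
    encoded = name
    for _ in range(times):
        # Each pass turns every '%' from the previous pass into '%25'.
        encoded = parse.quote(encoded)
    return encoded


station = "杭州"
print(encode_station(station, 1))  # plain UTF-8 percent-encoding
print(encode_station(station, 2))  # form seen in the page URL
print(encode_station(station, 3))  # form seen in js_url

# Decoding the same number of times recovers the original name.
assert parse.unquote(parse.unquote(encode_station(station, 2))) == station
```

Comparing the printed values with the fromCn and departureStation parameters in the URLs is a quick way to check how many rounds of encoding a given endpoint expects.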

LET’S GO

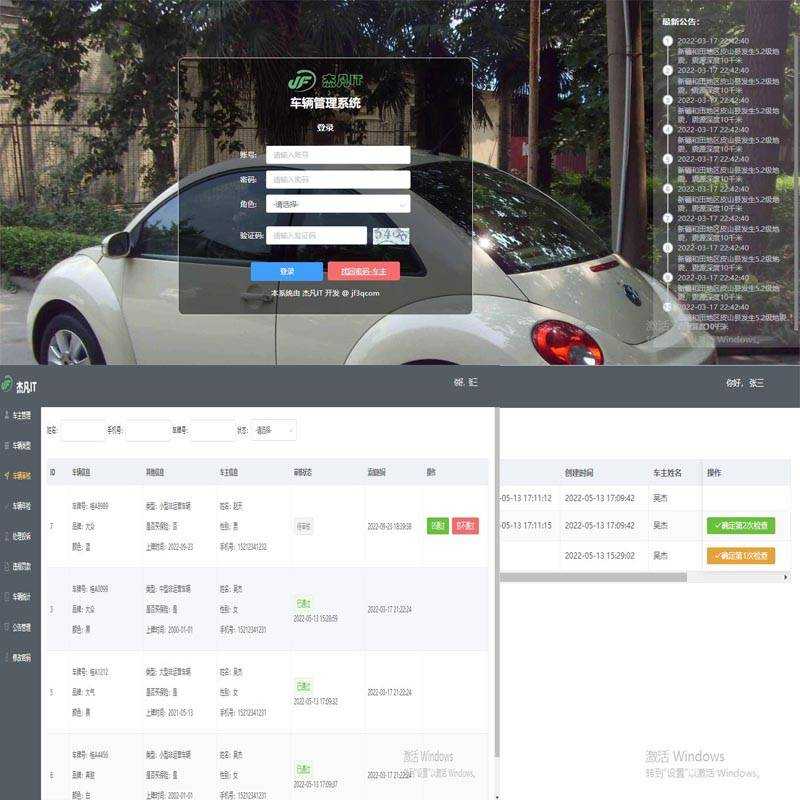


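To sum up, this is not hard. With solid basics, Python classes and functions, bs4 parsing (which I find more intuitive than XPath), and a quick look at the XHR requests in the browser's Network tab, you can write it.

url="https://trains.ctrip.com/pages/booking/hubSingleTrip?ticketType=5&fromCn=%25E6%259D%25AD%25E5%25B7%259E&toCn=%25E6%2596%25B0%25E4%25B9%25A1&departDate=2020-12-30"
# Original Ctrip one-way train page URL. Query parameters: fromCn = departure station, toCn = destination station, departDate = date

# The original page's query parameters must be URL-encoded twice (note 1)

# Ctrip's one-way results are in the HTML source of the page itself

'''

url="https://trains.ctrip.com/pages/booking/hubSingleTrip?ticketType=2&fromCn=%25E6%259D%25AD%25E5%25B7%259E&toCn=%25E5%258D%2583%25E5%25B2%259B%25E6%25B9%2596&departDate=2020-12-31"

js_url="https://trains.ctrip.com/pages/booking/getTransferList?departureStation=%2525E6%25259D%2525AD%2525E5%2525B7%25259E&arrivalStation=%2525E6%252596%2525B0%2525E4%2525B9%2525A1&departDateStr=2020-12-30"

The transfer results on Ctrip's transfer page are stored in JSON (js_url). Query parameters: departureStation, arrivalStation, departDateStr

Comparing the two URLs side by side makes the correspondence easy to spot

The js_url query parameters must be URL-encoded three times (note 2)

'''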

from urllib import parse
import random
from bs4 import BeautifulSoup
import csv
import os
import requests

# print(parse.unquote(parse.unquote("%25E6%259D%25AD%25E5%25B7%259E")))  # decodes to 杭州

fromArea = input("Departure station: ")
toArea = input("Destination station: ")
date = input("Date (YYYY-MM-DD): ")

# Create the folder that the CSV files are saved to
if not os.path.exists("D:/携程查找练习"):
    os.mkdir("D:/携程查找练习")

class NewsByTransfer():  # Scrapes transfer (connecting) trips
    def __init__(self):  # Initialize from the values entered above
        self.fromArea = fromArea
        self.toArea = toArea
        self.date = date

    def getOneJsUrl(self, fromArea, toArea, date):  # Build js_url
        fromArea = parse.quote(parse.quote(fromArea))  # encoded twice, as in the page URL
        departureStation = parse.quote(fromArea)  # encoded a third time for js_url
        toArea = parse.quote(parse.quote(toArea))
        arrivalStation = parse.quote(toArea)
        url = "https://trains.ctrip.com/pages/booking/hubSingleTrip?ticketType=5&fromCn=" + fromArea + "&toCn=" + toArea  # original page
        js_url = "https://trains.ctrip.com/pages/booking/getTransferList?departureStation=" + departureStation + "&arrivalStation=" + arrivalStation
        js_url = js_url + "&departDateStr=" + date
        # print(url)
        print(js_url)
        return js_url

    def getOneNews(self, js_url):  # Fetch js_url and extract the transfer results
        UA = [
            "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",
            "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",
            "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",
            'Mozilla/5.0 (Windows; U; Windows NT 5.1; it; rv:1.8.1.11) Gecko/20071127 Firefox/2.0.0.11',
            'Opera/9.25 (Windows NT 5.1; U; en)',
            'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)',
            'Mozilla/5.0 (compatible; Konqueror/3.5; Linux) KHTML/3.5.5 (like Gecko) (Kubuntu)',
            'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',
            'Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',
            "Mozilla/5.0 (X11; Linux i686) AppleWebKit/535.7 (KHTML, like Gecko) Ubuntu/11.04 Chromium/16.0.912.77 Chrome/16.0.912.77 Safari/535.7",
            "Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:10.0) Gecko/20100101 Firefox/10.0 "
        ]
        user_agent = random.choice(UA)
        # Parse the JSON response into Python dicts and lists
        text = requests.get(js_url, headers={"User-Agent": user_agent}, timeout=10).json()
        transferList = text["data"]["transferList"]  # main list of transfer results
        csvList = []  # rows written later with csv.DictWriter
        for oneTransfer in transferList:
            # print(oneTransfer)
            tranDict = {}
            tranDict["总出发站"] = oneTransfer["departStation"]  # overall departure station
            tranDict["总目的站"] = oneTransfer["arriveStation"]  # overall destination station
            # Summary: transfer station, layover, total travel time, price
            tranDict["总信息"] = (oneTransfer["transferStation"] + "换乘 停留" + oneTransfer["transferTakeTime"]
                               + " 全程" + oneTransfer["totalRuntime"] + " 价格" + oneTransfer['showPriceText'])
            trainTransferInfosList = oneTransfer["trainTransferInfos"]
            for trainTransferInfos in trainTransferInfosList:
                seq = trainTransferInfos['sequence']  # index of this leg
                tranDict[f"班次列车号{seq}"] = trainTransferInfos['trainNo']  # train number
                # Departure and arrival date/time of this leg
                tranDict[f"发车时间-到站时间{seq}"] = (trainTransferInfos['departDate'] + " " + trainTransferInfos['departTime']
                                                + "---" + trainTransferInfos['arriveDate'] + " " + trainTransferInfos['arriveTime'])
                # Departure and arrival station of this leg
                tranDict[f"发车站-目的站{seq}"] = trainTransferInfos['departStation'] + "---" + trainTransferInfos["arriveStation"]
            csvList.append(tranDict)
        print(csvList)
        return csvList

    def mkcsv(self, csvlist):  # Write the CSV file
        if not csvlist:  # nothing to write; csvlist[0] would raise IndexError
            print("No transfer results found")
            return
        # Collect every key, since rows can differ if the number of legs differs
        fieldnames = []
        for row in csvlist:
            for key in row:
                if key not in fieldnames:
                    fieldnames.append(key)
        with open(f"D:/携程查找练习/{csvlist[0]['总出发站']}到{csvlist[0]['总目的站']}转站查找.csv", "w+", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames)
            writer.writeheader()
            writer.writerows(csvlist)

    def main(self):
        js_url = self.getOneJsUrl(self.fromArea, self.toArea, self.date)
        csvList = self.getOneNews(js_url)
        self.mkcsv(csvList)

class NewsBySingle():  # Scrapes one-way trips
    def __init__(self):
        self.fromArea = fromArea
        self.toArea = toArea
        self.date = date

    def getOneUrl(self, fromArea, toArea, date):  # Build the page URL
        fromArea = parse.quote(parse.quote(fromArea))  # encoded twice
        toArea = parse.quote(parse.quote(toArea))
        url = "https://trains.ctrip.com/trainBooking/search?ticketType=0&fromCn=" + fromArea + "&toCn=" + toArea + "&day=" + date + "&mkt_header=&orderSource="
        print(url)
        return url

    def getOneNews(self, url):  # Fetch the page and parse it with bs4
        UA = [
            "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",
            "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",
            "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",
            'Mozilla/5.0 (Windows; U; Windows NT 5.1; it; rv:1.8.1.11) Gecko/20071127 Firefox/2.0.0.11',
            'Opera/9.25 (Windows NT 5.1; U; en)',
            'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)',
            'Mozilla/5.0 (compatible; Konqueror/3.5; Linux) KHTML/3.5.5 (like Gecko) (Kubuntu)',
            'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',
            'Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',
            "Mozilla/5.0 (X11; Linux i686) AppleWebKit/535.7 (KHTML, like Gecko) Ubuntu/11.04 Chromium/16.0.912.77 Chrome/16.0.912.77 Safari/535.7",
            "Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:10.0) Gecko/20100101 Firefox/10.0 "
        ]
        user_agent = random.choice(UA)
        # Fetch the page source for bs4 to parse
        text = requests.get(url, headers={"User-Agent": user_agent}, timeout=10).content.decode("utf-8")
        # print(text)
        soup = BeautifulSoup(text, "lxml")
        oneTripList = soup.select("div.railway_list")  # one element per train
        print(len(oneTripList))
        oneTripNewList = []
        for oneTrip in oneTripList:
            oneTripDict = {}
            # print(oneTrip)
            oneTripDict["班次列车号"] = oneTrip.select("strong")[0].string  # train number
            oneTripDict["出发站名称"] = oneTrip.select("span")[0].string  # departure station
            oneTripDict["出发站时间"] = oneTrip.select("strong")[1].string  # departure time
            oneTripDict["中途时间"] = list(oneTrip.select("div.haoshi")[0].stripped_strings)[0]  # travel time
            oneTripDict["目的站名称"] = oneTrip.select("span")[1].string  # destination station
            oneTripDict["到站时间"] = oneTrip.select("strong")[2].string  # arrival time
            print(oneTripDict)
            oneTripNewList.append(oneTripDict)
            print("---" * 60)
        print(oneTripNewList)
        return oneTripNewList

    def mkcsv(self, oneTripNewList):  # Write the CSV file
        if not oneTripNewList:  # nothing to write; oneTripNewList[0] would raise IndexError
            print("No one-way results found")
            return
        with open(f"D:/携程查找练习/{oneTripNewList[0]['出发站名称']}到{oneTripNewList[0]['目的站名称']}单程查找.csv", "w+", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, list(oneTripNewList[0].keys()))
            writer.writeheader()
            writer.writerows(oneTripNewList)

    def main(self):
        url = self.getOneUrl(self.fromArea, self.toArea, self.date)
        oneTripNewList = self.getOneNews(url)
        self.mkcsv(oneTripNewList)

NewsByTransfer().main()

NewsBySingle().main()
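The JSON-to-CSV step can be tried offline without hitting the site. The following is a rough sketch, not the script itself: the field names (departStation, arriveStation, trainTransferInfos, sequence, trainNo) follow the listing, but the sample payload, its values, the English column names, and the helpers `flatten` and `rows_to_csv` are all made up for illustration. It also builds the header from the union of all keys, because csv.DictWriter by default raises ValueError when a row contains a key that is not in its field names, which could happen if results have different numbers of legs.

```python
import csv
import io
import json

# Hypothetical payload: field names follow the listing, values are invented.
SAMPLE = json.loads("""
{"data": {"transferList": [
  {"departStation": "A", "arriveStation": "C",
   "trainTransferInfos": [
     {"sequence": 1, "trainNo": "G1"},
     {"sequence": 2, "trainNo": "K2"}]},
  {"departStation": "A", "arriveStation": "C",
   "trainTransferInfos": [
     {"sequence": 1, "trainNo": "D3"},
     {"sequence": 2, "trainNo": "G4"},
     {"sequence": 3, "trainNo": "T5"}]}
]}}
""")


def flatten(transfer):
    """Turn one transfer record into a flat dict with one column per leg."""
    row = {"from": transfer["departStation"], "to": transfer["arriveStation"]}
    for leg in transfer["trainTransferInfos"]:
        row[f"train_{leg['sequence']}"] = leg["trainNo"]
    return row


def rows_to_csv(rows):
    """Write dict rows to CSV text, using the union of all keys as the header."""
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    buffer = io.StringIO()
    # restval fills in columns that a shorter row does not have.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [flatten(t) for t in SAMPLE["data"]["transferList"]]
print(rows_to_csv(rows))
```

Writing to an in-memory buffer keeps the sketch independent of the D:/ folder; swapping the buffer for an open file gives the same result on disk.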

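That's all for today. Your support is my biggest motivation. One last small plug: as programmers, we all run into tricky problems now and then in our studies and work, the kind of bug that can't be solved right away. You can ask about them on the 杰凡IT Q&A platform, where plenty of experienced developers can help you solve the problem one-on-one. If you need it, take a look: https://www.jf3q.com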
